Deafness is often cited as the world's third most common disease. To cope with it, new research and technologies are being adopted. One of these is bone conduction, which we discuss in detail in this post.

What are bone conduction headphones, and how do they work? And will hearing with both ears help me hear better? We have the answers to those questions and then some.

INTRODUCTION

Bone conduction is a method of conducting sound to the inner ear through the bones of the skull.

Bone conduction technology can be used by people with normal hearing as well as by people with hearing loss.

Working

In bone conduction listening devices such as headphones, the device takes over the role of the eardrum: it converts sound waves into vibrations, and these vibrations reach the cochlea through the skull bones. From the cochlea, the vibration is passed to the brain as nerve impulses via the auditory nerve. The eardrums are not involved.

Ludwig van Beethoven, the famous composer born in the 18th century, is often credited with discovering bone conduction. Beethoven found a way to hear the sound of his piano by attaching one end of a rod to the piano and clenching the other end between his teeth. He perceived the sound as vibrations travelling from the piano to his jaw. This showed that sound can reach our auditory system through another medium, without using the eardrums at all.

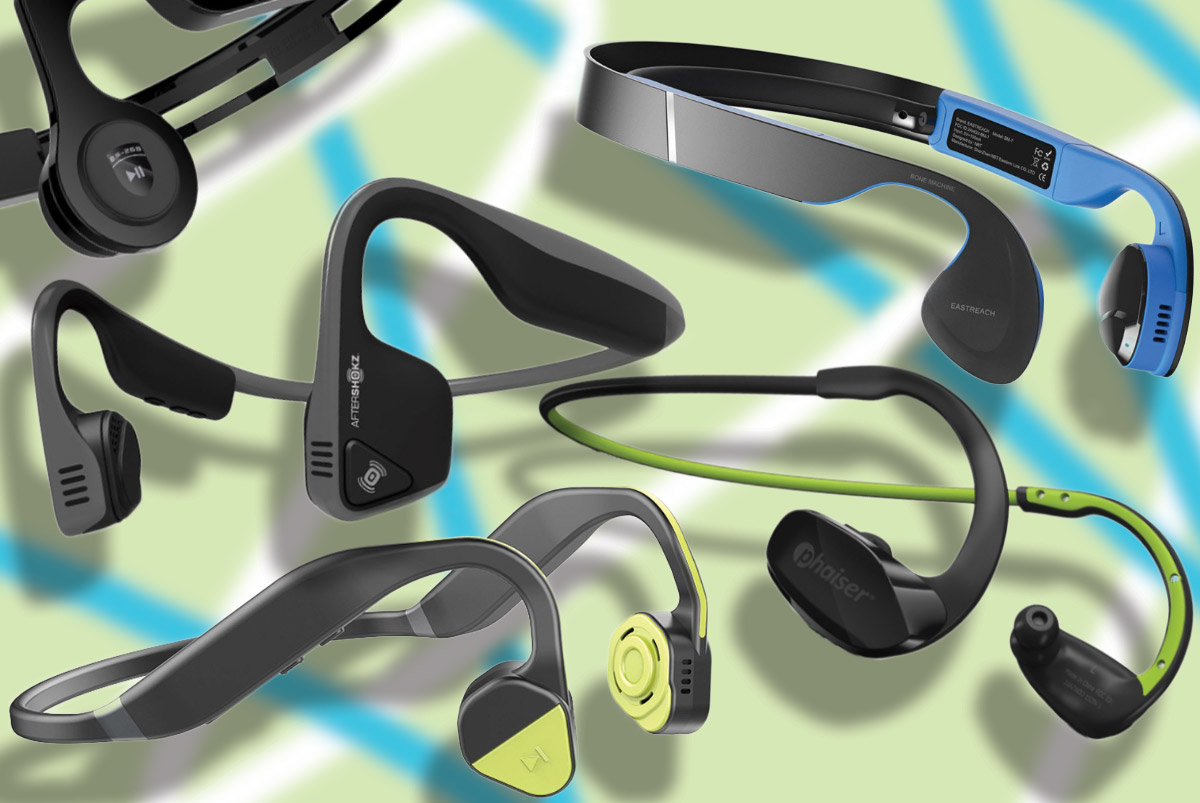

Types of Bone Conduction Devices

There are two types of bone conduction devices:

- The first type uses a fixture that sticks out from the bone beside the ear.

- The second type is fully implanted under the skin, with the processor attached to it by a magnet.

How bone conduction headphones work through Directionality

Localizing sounds, that is, identifying the direction they come from, is made possible primarily by having two ears. Just as having two eyes improves depth perception, input from two ears improves our ability to understand speech in noise. Someone with normal hearing in both ears has a better chance of understanding speech in noise than someone with perfect hearing in only one ear.

A single ear or a single microphone can provide directional information only by using a reflector. Because of the shape of our ears, a sound from the front is about 3 dB louder to humans than a sound from behind. Turning your back on a sound reduces its volume and increases the relative level of sounds in front of you. Turning toward a sound improves the signal-to-noise ratio (SNR) and your ability to understand speech in noise.
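The effect of facing a talker can be put into rough numbers. The sketch below is only an illustration: it assumes the roughly 3 dB front-versus-back difference mentioned above, treats speech and noise as arriving from opposite directions, and uses a hypothetical function name (`snr_db`) and hypothetical example levels of 60 dB.

```python
# Assumption: a sound from behind is heard about 3 dB quieter than the same
# sound from the front (the figure quoted in the text above).
FRONT_BACK_DIFFERENCE_DB = 3.0


def snr_db(speech_level_db, noise_level_db, facing_speech):
    """SNR in dB when speech and noise come from opposite directions.

    Whichever sound is behind the listener is reduced by
    FRONT_BACK_DIFFERENCE_DB before the ratio is taken.
    """
    if facing_speech:
        # Noise is behind the listener, so the noise is attenuated.
        noise_level_db -= FRONT_BACK_DIFFERENCE_DB
    else:
        # Speech is behind the listener, so the speech is attenuated.
        speech_level_db -= FRONT_BACK_DIFFERENCE_DB
    # Levels are in dB, so the ratio becomes a simple difference.
    return speech_level_db - noise_level_db


# Hypothetical example: speech and noise are equally loud at the source.
facing = snr_db(60.0, 60.0, facing_speech=True)
turned_away = snr_db(60.0, 60.0, facing_speech=False)
print(f"Facing the talker:  {facing:+.1f} dB SNR")
print(f"Back to the talker: {turned_away:+.1f} dB SNR")
```

Under these assumptions, turning to face the talker is worth about 6 dB of SNR compared with turning your back.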

How Digital Hearing Aid Microphones Work: Directionality in Hearing Aids

Digital hearing aid microphones work the same way, by pointing microphone inputs to the front and to the back. Around the turn of the century, dual-microphone hearing aids became common. Front and rear microphones and a digital sound processor (DSP) gave hearing aids the ability to separate sounds in front of the user from sounds behind. The DSP, the brain of the hearing aid, chooses which one to amplify and which one to ignore.
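One common way to get front/back separation from two microphones is a delay-and-subtract arrangement: the rear microphone signal is delayed and then subtracted from the front one. The text does not say which method any particular hearing aid uses, so the following is only a sketch under stated assumptions: a pure 1 kHz tone, ideal microphones, a hypothetical 12 mm microphone spacing, and a speed of sound of 343 m/s. The function name `directional_output_rms` is also made up for this example.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed speed of sound in air


def directional_output_rms(angle_deg, freq_hz=1000.0, spacing_m=0.012, samples=1000):
    """RMS output of a two-microphone delay-and-subtract pair for a unit tone.

    angle_deg: direction of the sound source (0 = front, 180 = rear).
    spacing_m: hypothetical distance between the front and rear microphones.
    """
    omega = 2.0 * math.pi * freq_hz
    # Arrival-time difference between the two microphones for this direction.
    arrival_diff = spacing_m * math.cos(math.radians(angle_deg)) / SPEED_OF_SOUND_M_S
    # Internal delay applied to the rear microphone. Setting it equal to the
    # acoustic travel time between the microphones places the null at the rear.
    internal_delay = spacing_m / SPEED_OF_SOUND_M_S
    duration = 10.0 / freq_hz  # simulate ten full periods of the tone
    total = 0.0
    for k in range(samples):
        t = k * duration / samples
        front_mic = math.sin(omega * (t + arrival_diff / 2.0))
        rear_mic_delayed = math.sin(omega * (t - arrival_diff / 2.0 - internal_delay))
        total += (front_mic - rear_mic_delayed) ** 2
    return math.sqrt(total / samples)


for angle in (0, 90, 180):
    print(f"{angle:3d} degrees: RMS output {directional_output_rms(angle):.3f}")
```

In this idealized model, a tone from the front produces the largest output, a tone from the side a smaller one, and a tone from directly behind cancels almost completely.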

Directionality, selecting which signal to focus on, makes advanced noise reduction possible. By wirelessly connecting the microphones in both hearing aids, the devices continuously map the wearer's sound environment to identify speech sounds. Advanced wireless hearing aids benefit from doubling the number of microphones in the array and from the separation between the left and right ears. This allows the DSP to steer its focus toward a speech source and away from the noise.

By constantly mapping the wearer's sound environment, the DSP also decides which of its stored algorithms will yield the best SNR for the current type of noise. Automatic switching allows hearing aids to adapt to changing sound conditions and choose the optimal environmental program without input from the wearer.
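The selection step can be pictured as picking whichever program is estimated to give the best SNR. The following is a sketch only: the program names, the SNR estimates, and the `switch_margin_db` parameter are hypothetical and are not taken from any real hearing aid.

```python
def choose_program(estimated_snr_db, current_program, switch_margin_db=2.0):
    """Pick the environmental program with the best estimated SNR.

    estimated_snr_db: dict mapping program name -> estimated SNR in dB.
    switch_margin_db: hypothetical margin; the program only changes when
    another program beats the current one by at least this much, which
    limits frequent program changes.
    """
    best_program = max(estimated_snr_db, key=estimated_snr_db.get)
    improvement = estimated_snr_db[best_program] - estimated_snr_db[current_program]
    return best_program if improvement >= switch_margin_db else current_program


# Hypothetical SNR estimates for a noisy restaurant.
estimates = {
    "omnidirectional": 1.0,
    "fixed_directional": 4.5,
    "adaptive_directional": 5.0,
}
print(choose_program(estimates, current_program="omnidirectional"))
print(choose_program(estimates, current_program="fixed_directional"))
```

With these made-up numbers, a device in the omnidirectional program switches to the adaptive directional one, while a device already in the fixed directional program stays put because the estimated improvement is below the margin.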

What to Expect

Whether we know it or not, our brains are constantly comparing the inputs from each ear. When we face the source of a sound, we expect to hear it at equal volume in both ears. Most pairs of hearing aids are programmed to the individual's hearing loss in each ear to maintain a balanced input from the left and right ears, making the soundscape as natural as possible for the wearer.

However, it's important for the user to understand that, by design, directional technology focuses on and amplifies one sound while nulling out another. When hearing aids automatically null a loud noise, it can sometimes feel as if one ear, the one toward the noise, is plugged or suddenly not hearing as well.

For new wearers, or anyone who hasn't been advised what to expect, a noticeable drop in hearing level can be disconcerting. Experienced wearers come to expect blocked feelings to come and go in noisy environments as their hearing aids automatically switch between environmental programs. Some people are more sensitive to program changes than others, particularly when hearing aids move in and out of a directional mode. Reducing the number of environmental programs available in the hearing aid DSP helps alleviate this sensitivity.

Conclusion

Doing these 3 things can improve speech comprehension in noise:

- Face the person or subject you would like to listen to

- Stay within 10 feet of your subject

- Position yourself so noise comes from a different direction than the subject